创建 map

创建 map 的语法很简单

// 不指定map长度

ageMp := make(map[string]int)

// 指定map长度

ageMp := make(map[string]int, 8)

// ageMp 为 nil,不能向其添加元素,会直接panic

var ageMp map[string]int

通过汇编语言,我们能够跟踪到 map 的创建最终会调用 runtime.makemap 方法

// makemap implements Go map creation for make(map[k]v, hint).

// If the compiler has determined that the map or the first bucket

// can be created on the stack, h and/or bucket may be non-nil.

// If h != nil, the map can be created directly in h.

// If h.buckets != nil, bucket pointed to can be used as the first bucket.

// 翻译:makemap实现了make(map[k]v, hint)这种形式语法的Go map创建。

// 如果编译器已经确定该map或者第一个bucket能够在这个栈上创建,那么h和(或)bucket可能为非nil。

// 如果h不是nil,那么该map能直接创建在这个h上。

// 如果h.buckets不是nil, 则被指向的bucket能被用来做第一个bucket

func makemap(t *maptype, hint int, h *hmap) *hmap {

// 计算指定的大小所需要的内容是否超出出系统允许的最大分配大小

mem, overflow := math.MulUintptr(uintptr(hint), t.bucket.size)

if overflow || mem > maxAlloc {

hint = 0

}

// 初始化hmap,并指定随机种子

if h == nil {

h = new(hmap)

}

h.hash0 = fastrand()

// Find the size parameter B which will hold the requested # of elements.

// For hint < 0 overLoadFactor returns false since hint < bucketCnt.

// 通过overLoadFactor(hint, B)函数找到一个能装下指定map大小个元素个数的最小B,要满足 装载因子*2^B < hint

B := uint8(0)

for overLoadFactor(hint, B) {

B++

}

h.B = B

// allocate initial hash table

// if B == 0, the buckets field is allocated lazily later (in mapassign)

// If hint is large zeroing this memory could take a while.

// 开始对hash table进行初始化。如果B==0则buckets 进行懒初始化操作(赋值的时候才进行初始化),如果B值特别大,则初始化需要一段时间,主要通过 makeBucketArray() 函数实现

if h.B != 0 {

var nextOverflow *bmap

h.buckets, nextOverflow = makeBucketArray(t, h.B, nil)

if nextOverflow != nil {

h.extra = new(mapextra)

// 溢出桶地址赋值

h.extra.nextOverflow = nextOverflow

}

}

return h

}

// makeBucketArray initializes a backing array for map buckets.

// 1<<b is the minimum number of buckets to allocate.

// dirtyalloc should either be nil or a bucket array previously

// allocated by makeBucketArray with the same t and b parameters.

// If dirtyalloc is nil a new backing array will be alloced and

// otherwise dirtyalloc will be cleared and reused as backing array.

// 翻译:makeBucketArray为map buckets初始化一个备用数组

// 2^b是该buckets长度的最小值

// dirtyalloc之前应该是nil或者bucket数组

// 如果dirtyalloc 为nil,将分配一个新的后备数组,否则将清除dirtyalloc 并作为后备数组重用。

func makeBucketArray(t *maptype, b uint8, dirtyalloc unsafe.Pointer) (buckets unsafe.Pointer, nextOverflow *bmap) {

base := bucketShift(b) // base = 2^b

nbuckets := base

// For small b, overflow buckets are unlikely.

// Avoid the overhead of the calculation.

// 对于小的b,不太可能会需要溢出桶,可以避免这部分计算开销

// 对于b>4(桶的数量> 2^4)的话,则需要创建2^(b-4)个溢出桶。

if b >= 4 {

// 加上溢出桶的数量

nbuckets += bucketShift(b - 4)

// 总大小

sz := t.bucket.size * nbuckets

// 针对所需要的内存大小,mallocgc将分配的内存块大小

up := roundupsize(sz)

if up != sz {

// 如果总大小和mallocgc将分配的内存块大小不同,以mallocgc分配的为准,计算nbuckets

nbuckets = up / t.bucket.size

}

}

if dirtyalloc == nil {

buckets = newarray(t.bucket, int(nbuckets))

} else {

// dirtyalloc was previously generated by

// the above newarray(t.bucket, int(nbuckets))

// but may not be empty.

buckets = dirtyalloc

size := t.bucket.size * nbuckets

if t.bucket.ptrdata != 0 {

memclrHasPointers(buckets, size)

} else {

memclrNoHeapPointers(buckets, size)

}

}

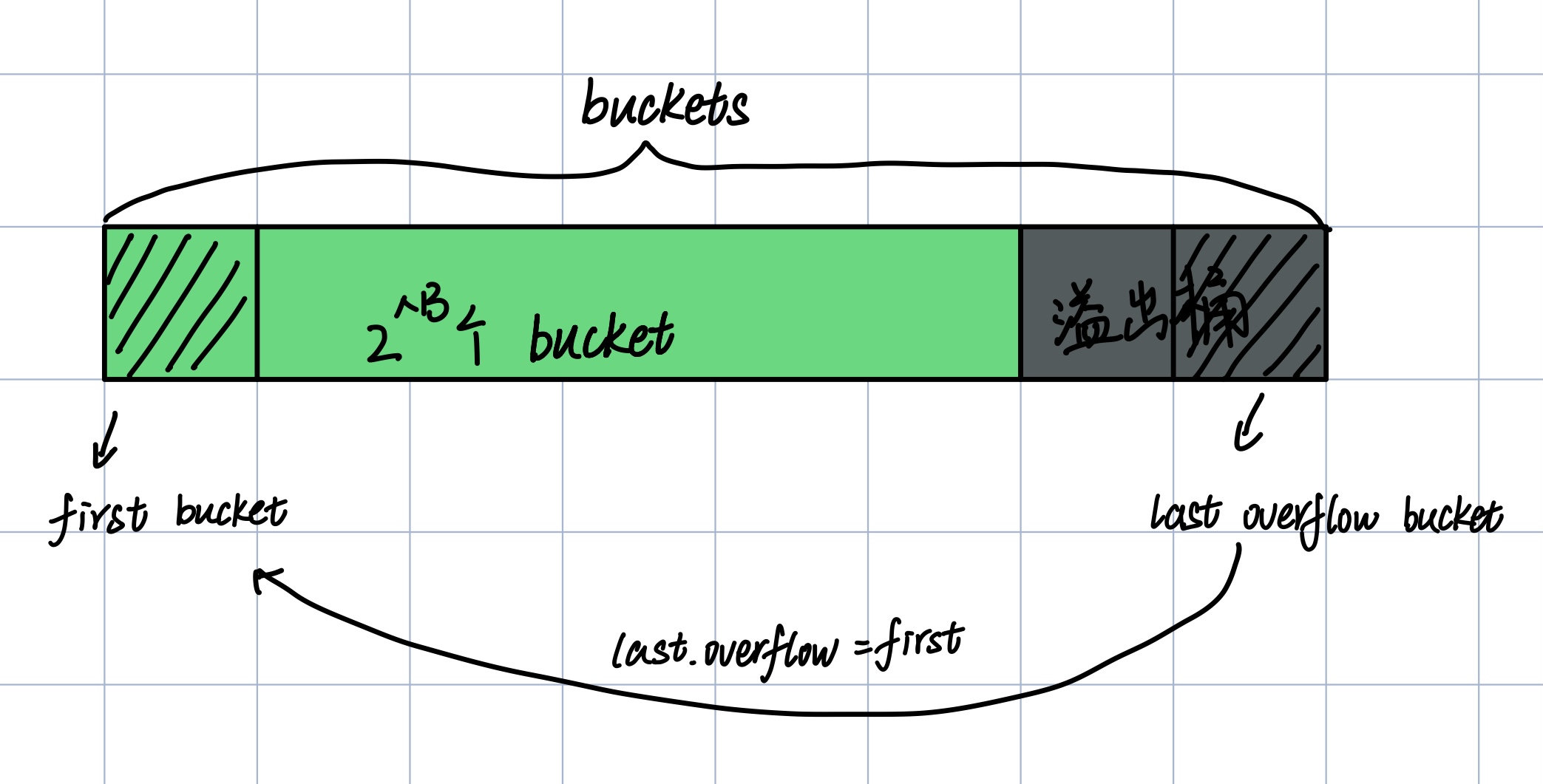

if base != nbuckets {

// We preallocated some overflow buckets.

// To keep the overhead of tracking these overflow buckets to a minimum,

// we use the convention that if a preallocated overflow bucket's overflow

// pointer is nil, then there are more available by bumping the pointer.

// We need a safe non-nil pointer for the last overflow bucket; just use buckets.

// 我们提前分配了一些溢出桶,为了使得追踪溢出桶的开销最小,我们这样约定:

// 如果溢出桶的overflow指针为nil,那么代表还有空间在出现哈希碰撞时使用,

// 溢出桶的最后一个桶的overflow需要指向一个安全的非空指针,这里指向了buckets的第一个桶

// 具体的用处在map写入时,需要创建溢出桶时会用到,具体在newoverflow函数中

nextOverflow = (*bmap)(add(buckets, base*uintptr(t.bucketsize))) // 指向第一个溢出桶

last := (*bmap)(add(buckets, (nbuckets-1)*uintptr(t.bucketsize))) // 指向最后一个溢出桶

last.setoverflow(t, (*bmap)(buckets)) // 溢出桶的最后一个桶的overflow指向了buckets的第一个桶

}

return buckets, nextOverflow

}

从上面代码可以得知,2^B 并不等于 buckets 的大小,它只是创建 buckets 时的 base 部分,在该部分后,还有溢出桶。所以正常桶和溢出桶在内存上的分布是连续的,溢出桶的指针记录在了 hmap 的 extra 字段中。

map 的读取

对于 map 的读取操作,最终是调用了函数 runtime.mapaccess1()和 runtime.mapaccess2() 。两者的唯一区别就是返回值不一样,runtime.mapaccess1() 返回的是一个值,runtime.mapaccess2() 返回的是两个值,第二个值表示 key 是否在 map 中存在。即我们常用的两种 map 取值方法。

v := ageMap["yicixin"]

v, ok := ageMap["yicixin"]

所以我们只需要介绍runtime.mapaccess1(),

// mapaccess1 returns a pointer to h[key]. Never returns nil, instead

// it will return a reference to the zero object for the elem type if

// the key is not in the map.

// NOTE: The returned pointer may keep the whole map live, so don't

// hold onto it for very long.

// mapaccess1返回指向h[key]的指针,永远不会返回nil,如果key不存在,就会返回元素对应类型的空值。

// 注意:返回的指针可能会使得整个map处于活动状态,所以不要长时间持有它

func mapaccess1(t *maptype, h *hmap, key unsafe.Pointer) unsafe.Pointer {

if raceenabled && h != nil {

callerpc := getcallerpc()

pc := funcPC(mapaccess1)

racereadpc(unsafe.Pointer(h), callerpc, pc)

raceReadObjectPC(t.key, key, callerpc, pc)

}

if msanenabled && h != nil {

msanread(key, t.key.size)

}

// 判断h是否为nil或者h.count值是否为0,如果h为nil则表示未初始化,则可能panic,如果h.count=0,则表示map为空,则直接返回一个zero值。

if h == nil || h.count == 0 {

if t.hashMightPanic() {

t.hasher(key, 0) // see issue 23734

}

return unsafe.Pointer(&zeroVal[0])

}

// 考虑是否处于并发读写状态,否则产生panic

if h.flags&hashWriting != 0 {

throw("concurrent map read and map write")

}

// 根据键key计算hash值

hash := t.hasher(key, uintptr(h.hash0))

// 计算低B位的掩码bucketMask(h.B),比如 B=5,那 m 就是31,低五位二进制是全1

m := bucketMask(h.B)

// 计算当前bucket的地址

b := (*bmap)(add(h.buckets, (hash&m)*uintptr(t.bucketsize)))

// 根据h.oldbuckets判断是否处于扩容中,如果不是nil则表示当前map正处于扩容状态中

if c := h.oldbuckets; c != nil {

if !h.sameSizeGrow() {

// There used to be half as many buckets; mask down one more power of two.

m >>= 1

}

oldb := (*bmap)(add(c, (hash&m)*uintptr(t.bucketsize)))

if !evacuated(oldb) {

b = oldb

}

}

// 计算tophash,即高八位

top := tophash(hash)

// 真正开始查找key,外层for是循环bucket及溢出桶overflow,内层for是循环桶内的8个slot

bucketloop:

for ; b != nil; b = b.overflow(t) {

for i := uintptr(0); i < bucketCnt; i++ {

if b.tophash[i] != top {

if b.tophash[i] == emptyRest {

break bucketloop

}

continue

}

k := add(unsafe.Pointer(b), dataOffset+i*uintptr(t.keysize))

if t.indirectkey() {

k = *((*unsafe.Pointer)(k))

}

if t.key.equal(key, k) {

e := add(unsafe.Pointer(b), dataOffset+bucketCnt*uintptr(t.keysize)+i*uintptr(t.elemsize))

if t.indirectelem() {

e = *((*unsafe.Pointer)(e))

}

return e

}

}

}

return unsafe.Pointer(&zeroVal[0])

}

key 的定位公式:

k := add(unsafe.Pointer(b), dataOffset+i*uintptr(t.keysize))

value 的定位公式:

e := add(unsafe.Pointer(b), dataOffset+bucketCnt*uintptr(t.keysize)+i*uintptr(t.elemsize))

其中 dataOffset 表示 第一个 key 相对于 bmap 的偏移量,结构体如下

dataOffset = unsafe.Offsetof(struct {

b bmap

v int64

}{}.v)

所以键的地址=当前 bucket 的起始位置(unsafe.Pointer(b)) + 第一个 key 的偏移量(dataOffset)+当前 slot 索引值(i) * 每个键的大小(uintptr(t.keysize))

而对于值来说,由于 bmap.values 在 b.map.keys 后面,所以要先将 8 个键的地址全部计算上才行,同样值类型也有自己的大小 t.elemsize

bucket 的几种状态码

emptyRest = 0 // 当前cell为空, 并且它后面的所有cell也为空, 包括溢出桶overflow

emptyOne = 1 // 当前cell为空

evacuatedX = 2 // key/elem is valid. Entry has been evacuated to first half of larger table.

evacuatedY = 3 // 扩容相关,第二部分迁移完毕。

evacuatedEmpty = 4 // 当前cell为空,且迁移完成。

minTopHash = 5 // tophash最小值,如果在调用 tophash(hash)时,计算出的值小于此值,则会加上此值

// tophash calculates the tophash value for hash.

func tophash(hash uintptr) uint8 {

top := uint8(hash >> (sys.PtrSize*8 - 8))

if top < minTopHash {

top += minTopHash

}

return top

}

map 写入

与 map 写入相关的 runtime 函数是runtime.mapassign(),对于某些 key 类型,runtime 还有特定的函数。

mapassign_faststr(t *maptype, h *hmap, s string) unsafe.Pointer

mapassign_fast64(t *maptype, h *hmap, key uint64) unsafe.Pointer

mapassign_fast32(t *maptype, h *hmap, key uint32) unsafe.Pointer

这是runtime.mapassign函数:

// Like mapaccess, but allocates a slot for the key if it is not present in the map.

func mapassign(t *maptype, h *hmap, key unsafe.Pointer) unsafe.Pointer {

// 空map写入会触发panic

if h == nil {

panic(plainError("assignment to entry in nil map"))

}

// raceenabled-是否启用数据竞争检测

if raceenabled {

callerpc := getcallerpc()

pc := funcPC(mapassign)

racewritepc(unsafe.Pointer(h), callerpc, pc)

raceReadObjectPC(t.key, key, callerpc, pc)

}

if msanenabled {

msanread(key, t.key.size)

}

// 如果并发写入会触发panic

if h.flags&hashWriting != 0 {

throw("concurrent map writes")

}

// 计算hash值

hash := t.hasher(key, uintptr(h.hash0))

// Set hashWriting after calling t.hasher, since t.hasher may panic,

// in which case we have not actually done a write.

// 设置写入标志位

h.flags ^= hashWriting

if h.buckets == nil {

h.buckets = newobject(t.bucket) // newarray(t.bucket, 1)

}

again:

// 定位bucket

bucket := hash & bucketMask(h.B)

if h.growing() {

growWork(t, h, bucket)

}

b := (*bmap)(add(h.buckets, bucket*uintptr(t.bucketsize)))

top := tophash(hash)

var inserti *uint8 // tophash的位置

var insertk unsafe.Pointer // key的地址

var elem unsafe.Pointer // val的地址

bucketloop:

for {

for i := uintptr(0); i < bucketCnt; i++ {

if b.tophash[i] != top {

if isEmpty(b.tophash[i]) && inserti == nil {

inserti = &b.tophash[i]

insertk = add(unsafe.Pointer(b), dataOffset+i*uintptr(t.keysize))

elem = add(unsafe.Pointer(b), dataOffset+bucketCnt*uintptr(t.keysize)+i*uintptr(t.elemsize))

}

// 当前cell为空, 并且它后面的所有cell也为空, 包括溢出桶overflow, 可以退出循环了

if b.tophash[i] == emptyRest {

break bucketloop

}

continue

}

// b.tophash[i] == top

k := add(unsafe.Pointer(b), dataOffset+i*uintptr(t.keysize))

if t.indirectkey() {

k = *((*unsafe.Pointer)(k))

}

if !t.key.equal(key, k) {

continue

}

// already have a mapping for key. Update it.

if t.needkeyupdate() {

typedmemmove(t.key, k, key)

}

elem = add(unsafe.Pointer(b), dataOffset+bucketCnt*uintptr(t.keysize)+i*uintptr(t.elemsize))

goto done

}

// 如果还有溢出桶,继续查询溢出桶

ovf := b.overflow(t)

if ovf == nil {

break

}

b = ovf

}

// Did not find mapping for key. Allocate new cell & add entry.

// If we hit the max load factor or we have too many overflow buckets,

// and we're not already in the middle of growing, start growing.

// 如果负载因子到了最大或者有太多的溢出桶,并且不在扩容中,开始扩容

if !h.growing() && (overLoadFactor(h.count+1, h.B) || tooManyOverflowBuckets(h.noverflow, h.B)) {

hashGrow(t, h)

goto again // Growing the table invalidates everything, so try again

}

if inserti == nil {

// The current bucket and all the overflow buckets connected to it are full, allocate a new one.

// 当前bucket和所有的溢出桶都满了,创建新的溢出桶

newb := h.newoverflow(t, b)

inserti = &newb.tophash[0]

insertk = add(unsafe.Pointer(newb), dataOffset)

elem = add(insertk, bucketCnt*uintptr(t.keysize))

}

// store new key/elem at insert position

if t.indirectkey() {

kmem := newobject(t.key)

*(*unsafe.Pointer)(insertk) = kmem

insertk = kmem

}

if t.indirectelem() {

vmem := newobject(t.elem)

*(*unsafe.Pointer)(elem) = vmem

}

// 写入key

typedmemmove(t.key, insertk, key)

*inserti = top

// 哈希元素数量+1

h.count++

done:

if h.flags&hashWriting == 0 {

throw("concurrent map writes")

}

h.flags &^= hashWriting

if t.indirectelem() {

elem = *((*unsafe.Pointer)(elem))

}

return elem

}

在mapassign中并没有对 val 进行直接赋值,而是返回了 val 的地址,真正的赋值操作在哪呢?

var a = make(map[int]int, 7)

for i := 0; i < 1000; i++ {

a[i] = 99999

}

看看这段代码对应的汇编:

0x003f 00063 (m.go:9) MOVQ DX, (SP) // 第一个参数

0x0043 00067 (m.go:9) MOVQ AX, 8(SP) // 第二个参数

0x0048 00072 (m.go:9) MOVQ CX, 16(SP) // 第三个参数

0x004d 00077 (m.go:9) PCDATA $0, $1 // GC 相关

0x004d 00077 (m.go:9) CALL runtime.mapassign_fast64(SB) // 调用函数

0x0052 00082 (m.go:9) MOVQ 24(SP), AX // 返回值,即 value 应该存放的内存地址

0x0057 00087 (m.go:9) MOVQ $99999, (AX) // 把 99999 放入该地址中

map 扩容

在上文中的 map 写入操作过程中,有关于 map 扩容的代码,在这一部分细谈 map 扩容机制

if !h.growing() && (overLoadFactor(h.count+1, h.B) || tooManyOverflowBuckets(h.noverflow, h.B)) {

hashGrow(t, h)

goto again // Growing the table invalidates everything, so try again

}

在写入时,有两种情况会触发扩容:

- 负载因子超过上限,即元素个数 >= 桶个数 * 6.5,这时候说明大部分的桶可能都快满了,如果插入新元素,有大概率需要挂在 overflow 的桶上。

- 太多溢出桶,什么情况会导致太多溢出桶而又不超过负载因子上线呢?这可能发生在我们对 map 一边插入,一边删除,导致其中很多桶出现空洞,虽然使用了很多溢出桶,但是总元素个数并没有超出负载因子上限。这时 bucket 的使用率不高,值存储得比较稀疏,在查找时效率会下降,占据了大量内存却无用,等同于内存泄漏。

满足任意情况且目前不在扩容状态!h.growing()时,即会进行扩容,map 扩容的函数就是hashGrow(t, h)

针对上面两种不同情况,map 的扩容方法也是不一样的。

func hashGrow(t *maptype, h *hmap) {

// If we've hit the load factor, get bigger.

// Otherwise, there are too many overflow buckets,

// so keep the same number of buckets and "grow" laterally.

bigger := uint8(1)

// 如果不是负载因子超出上限,那么进行的是一次等量扩容,bucket的数量不变

if !overLoadFactor(h.count+1, h.B) {

bigger = 0

// sameSizeGrow,即为等量扩容

h.flags |= sameSizeGrow

}

oldbuckets := h.buckets

// 等量扩容bigger为0,bucket数不变。否则bigger为1,bucket数翻倍

newbuckets, nextOverflow := makeBucketArray(t, h.B+bigger, nil)

flags := h.flags &^ (iterator | oldIterator)

if h.flags&iterator != 0 {

flags |= oldIterator

}

// commit the grow (atomic wrt gc)

h.B += bigger

h.flags = flags

h.oldbuckets = oldbuckets

h.buckets = newbuckets

h.nevacuate = 0

h.noverflow = 0

if h.extra != nil && h.extra.overflow != nil {

// Promote current overflow buckets to the old generation.

if h.extra.oldoverflow != nil {

throw("oldoverflow is not nil")

}

h.extra.oldoverflow = h.extra.overflow

h.extra.overflow = nil

}

if nextOverflow != nil {

if h.extra == nil {

h.extra = new(mapextra)

}

h.extra.nextOverflow = nextOverflow

}

// the actual copying of the hash table data is done incrementally

// by growWork() and evacuate().

// 哈希表数据的实际复制是由 growWork() 和 evacuate() 逐步完成的

}

func growWork(t *maptype, h *hmap, bucket uintptr) {

// 确保我们移动的 oldbucket 对应的是我们马上就要用到的那一个

evacuate(t, h, bucket&h.oldbucketmask())

// 如果还在 growing 状态,再多移动一个 oldbucket

if h.growing() {

evacuate(t, h, h.nevacuate)

}

}

重要的是真正的数据迁移部分,evacuate函数。

func evacuate(t *maptype, h *hmap, oldbucket uintptr) {

b := (*bmap)(add(h.oldbuckets, oldbucket*uintptr(t.bucketsize)))

newbit := h.noldbuckets()

if !evacuated(b) {

// TODO: reuse overflow buckets instead of using new ones, if there

// is no iterator using the old buckets. (If !oldIterator.)

// xy contains the x and y (low and high) evacuation destinations.

// evacDst用于保存分配上下文

// xy 包含的是移动的目标

// x 表示新 bucket 数组的前(low)半部分

// y 表示新 bucket 数组的后(high)半部分

var xy [2]evacDst

x := &xy[0]

x.b = (*bmap)(add(h.buckets, oldbucket*uintptr(t.bucketsize)))

x.k = add(unsafe.Pointer(x.b), dataOffset)

x.e = add(x.k, bucketCnt*uintptr(t.keysize))

// 如果是等量扩容,新旧bucket是一对一,不需要两个evacDst,而当哈希表的容量翻倍时,每个旧桶的元素会都分流到新创建的两个桶中

if !h.sameSizeGrow() {

// Only calculate y pointers if we're growing bigger.

// Otherwise GC can see bad pointers.

y := &xy[1]

y.b = (*bmap)(add(h.buckets, (oldbucket+newbit)*uintptr(t.bucketsize)))

y.k = add(unsafe.Pointer(y.b), dataOffset)

y.e = add(y.k, bucketCnt*uintptr(t.keysize))

}

for ; b != nil; b = b.overflow(t) {

k := add(unsafe.Pointer(b), dataOffset)

e := add(k, bucketCnt*uintptr(t.keysize))

for i := 0; i < bucketCnt; i, k, e = i+1, add(k, uintptr(t.keysize)), add(e, uintptr(t.elemsize)) {

top := b.tophash[i]

if isEmpty(top) {

b.tophash[i] = evacuatedEmpty

continue

}

if top < minTopHash {

throw("bad map state")

}

k2 := k

if t.indirectkey() {

k2 = *((*unsafe.Pointer)(k2))

}

var useY uint8

if !h.sameSizeGrow() {

// Compute hash to make our evacuation decision (whether we need

// to send this key/elem to bucket x or bucket y).

hash := t.hasher(k2, uintptr(h.hash0))

if h.flags&iterator != 0 && !t.reflexivekey() && !t.key.equal(k2, k2) {

// If key != key (NaNs), then the hash could be (and probably

// will be) entirely different from the old hash. Moreover,

// it isn't reproducible. Reproducibility is required in the

// presence of iterators, as our evacuation decision must

// match whatever decision the iterator made.

// Fortunately, we have the freedom to send these keys either

// way. Also, tophash is meaningless for these kinds of keys.

// We let the low bit of tophash drive the evacuation decision.

// We recompute a new random tophash for the next level so

// these keys will get evenly distributed across all buckets

// after multiple grows.

useY = top & 1

top = tophash(hash)

} else {

if hash&newbit != 0 {

useY = 1

}

}

}

if evacuatedX+1 != evacuatedY || evacuatedX^1 != evacuatedY {

throw("bad evacuatedN")

}

b.tophash[i] = evacuatedX + useY // evacuatedX + 1 == evacuatedY

dst := &xy[useY] // evacuation destination

if dst.i == bucketCnt {

dst.b = h.newoverflow(t, dst.b)

dst.i = 0

dst.k = add(unsafe.Pointer(dst.b), dataOffset)

dst.e = add(dst.k, bucketCnt*uintptr(t.keysize))

}

dst.b.tophash[dst.i&(bucketCnt-1)] = top // mask dst.i as an optimization, to avoid a bounds check

if t.indirectkey() {

*(*unsafe.Pointer)(dst.k) = k2 // copy pointer

} else {

typedmemmove(t.key, dst.k, k) // copy elem

}

if t.indirectelem() {

*(*unsafe.Pointer)(dst.e) = *(*unsafe.Pointer)(e)

} else {

typedmemmove(t.elem, dst.e, e)

}

dst.i++

// These updates might push these pointers past the end of the

// key or elem arrays. That's ok, as we have the overflow pointer

// at the end of the bucket to protect against pointing past the

// end of the bucket.

dst.k = add(dst.k, uintptr(t.keysize))

dst.e = add(dst.e, uintptr(t.elemsize))

}

}

// Unlink the overflow buckets & clear key/elem to help GC.

if h.flags&oldIterator == 0 && t.bucket.ptrdata != 0 {

b := add(h.oldbuckets, oldbucket*uintptr(t.bucketsize))

// Preserve b.tophash because the evacuation

// state is maintained there.

ptr := add(b, dataOffset)

n := uintptr(t.bucketsize) - dataOffset

memclrHasPointers(ptr, n)

}

}

if oldbucket == h.nevacuate {

advanceEvacuationMark(h, t, newbit)

}

}